Cell Devel Nodes


Revision as of 14:37, 25 January 2010

| Cell Development Cluster | |
|---|---|
| Installed | June 2010 |
| Operating System | Linux |
| Interconnect | InfiniBand |
| RAM/Node | 32 GB |
| Cores/Node | 2 PPU + 16 SPU |
| Login/Devel Node | cell-srv01 (from login.scinet) |
| Vendor Compilers | ppu-gcc, spu-gcc |

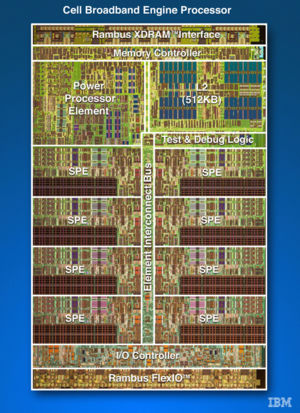

The Cell Development Cluster is a technology evaluation cluster combining 14 IBM PowerXCell 8i "Cell" nodes and 8 Intel x86_64 "Nehalem" nodes. The Cell nodes are IBM QS22 blades, each with two 3.2 GHz IBM PowerXCell 8i CPUs; each CPU has 1 Power Processing Unit (PPU) and 8 Synergistic Processing Units (SPUs), and each node has 32 GB of RAM. The Intel nodes each have two 2.53 GHz 4-core Xeon X5550 CPUs and 48 GB of RAM.
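The per-node and cluster-wide totals follow directly from the figures above. A minimal sketch in Python that does the arithmetic; the node counts and per-CPU figures are taken from this page, while the class, function, and field names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    """Hardware figures for one kind of node, as listed on this page."""
    name: str
    nodes: int            # number of nodes of this type
    cpus_per_node: int    # CPU sockets per node
    cores_per_cpu: dict   # core kind -> count per CPU
    ram_gb_per_node: int


def totals(nt: NodeType) -> dict:
    """Return per-node and cluster-wide core counts plus total RAM in GB."""
    per_node = {kind: n * nt.cpus_per_node for kind, n in nt.cores_per_cpu.items()}
    cluster = {kind: n * nt.nodes for kind, n in per_node.items()}
    return {
        "per_node": per_node,
        "cluster": cluster,
        "ram_gb": nt.ram_gb_per_node * nt.nodes,
    }


# Figures from the description above: 14 QS22 nodes and 8 Nehalem nodes.
CELL = NodeType("QS22 (PowerXCell 8i)", nodes=14, cpus_per_node=2,
                cores_per_cpu={"PPU": 1, "SPU": 8}, ram_gb_per_node=32)
NEHALEM = NodeType("Xeon X5550", nodes=8, cpus_per_node=2,
                   cores_per_cpu={"core": 4}, ram_gb_per_node=48)

if __name__ == "__main__":
    for nt in (CELL, NEHALEM):
        print(nt.name, totals(nt))
```

The 2 PPU + 16 SPU per node matches the table above; across the 14 Cell nodes this comes to 28 PPUs and 224 SPUs (448 GB of RAM), and the 8 Nehalem nodes together have 64 x86_64 cores (384 GB of RAM).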

Login

First log in via ssh with your SciNet account at login.scinet.utoronto.ca; from there, ssh to cell-srv01, which is currently the gateway machine.
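A minimal sketch of the two-hop login, written in Python only to assemble the ssh command line. The username is a placeholder, and the `-J` (ProxyJump) variant assumes that login.scinet permits forwarding through to cell-srv01; the fallback variant simply runs a second ssh on the login node, which is the procedure described above.

```python
from __future__ import annotations

import shlex

LOGIN_HOST = "login.scinet.utoronto.ca"
GATEWAY = "cell-srv01"


def login_command(user: str, jump: bool = True) -> list[str]:
    """Build an ssh argv that reaches the Cell gateway via the SciNet login node.

    jump=True uses OpenSSH's -J (ProxyJump) option; this ASSUMES the login
    node allows forwarding. jump=False runs a second ssh on the login node.
    """
    if jump:
        return ["ssh", "-J", f"{user}@{LOGIN_HOST}", f"{user}@{GATEWAY}"]
    # -t forces a terminal so the inner interactive ssh session works
    return ["ssh", "-t", f"{user}@{LOGIN_HOST}", "ssh", GATEWAY]


if __name__ == "__main__":
    # "myuser" is a placeholder for your SciNet username
    print(shlex.join(login_command("myuser")))
    print(shlex.join(login_command("myuser", jump=False)))
```

If the `-J` form is refused, the nested form does exactly what the manual two-step login does, just in one command.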

Compile/Devel/Compute Nodes

Currently you can log into any of the 14 nodes, blade01 through blade14, directly to compile, test, and run your code.
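The node names follow a zero-padded pattern. A small illustrative helper (the function names are hypothetical) that lists the valid hostnames and builds the ssh command for one of them, assuming you run it from cell-srv01 and that a plain `ssh bladeNN` works there:

```python
from __future__ import annotations

import re
import shlex

NUM_BLADES = 14  # blade01..blade14, as listed on this page


def blade_hosts(n: int = NUM_BLADES) -> list[str]:
    """Return the hostnames blade01 .. bladeNN, zero-padded to two digits."""
    return [f"blade{i:02d}" for i in range(1, n + 1)]


def is_blade(host: str, n: int = NUM_BLADES) -> bool:
    """True if host is one of the development blades (exactly two digits)."""
    m = re.fullmatch(r"blade(\d{2})", host)
    return m is not None and 1 <= int(m.group(1)) <= n


def blade_ssh(host: str) -> list[str]:
    """Build the ssh argv for a blade; ASSUMES it is run on cell-srv01."""
    if not is_blade(host):
        raise ValueError(f"not a development blade: {host!r}")
    return ["ssh", host]


if __name__ == "__main__":
    print(", ".join(blade_hosts()))
    print(shlex.join(blade_ssh("blade03")))
```

Validating the name first catches typos such as `blade7` or `blade15` before ssh tries to resolve them.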

Local Disk

Unlike on the GPC and TCS, you currently can NOT see your global /home and /scratch space from this cluster, so you will have to copy your code to a separate local /home dedicated to this cluster.
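One way to do the copy, sketched under assumptions the page does not spell out: that login.scinet.utoronto.ca sees your global /home, that it can scp directly to cell-srv01, and that the blades share the cluster's local /home with cell-srv01. The helper name is hypothetical; it only builds the command line.

```python
from __future__ import annotations

import shlex

GATEWAY = "cell-srv01"


def copy_to_cell_home(src: str, dest: str = "") -> list[str]:
    """Build an scp argv that copies src from the global /home into the
    Cell cluster's separate /home.

    ASSUMES it is run on login.scinet, which can reach cell-srv01 by scp.
    An empty dest copies into your home directory on the gateway.
    """
    return ["scp", "-r", src, f"{GATEWAY}:{dest}"]


if __name__ == "__main__":
    # "mycode" is a placeholder directory, relative to your global /home
    print(shlex.join(copy_to_cell_home("mycode")))
```

Because the two /home areas are separate, the copy on the cluster does not follow later edits to the original; rerun the copy (or use rsync, if it is installed on both ends) after changing your code.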

Programming Frameworks

Currently there are two programming frameworks to use.