Difference between revisions of "BGQ"


Revision as of 12:11, 29 August 2012

| Blue Gene/Q (BGQ) | |
|---|---|
| Installed | August 2012 |
| Operating System | RH6.3, CNK (Linux) |
| Number of Nodes | 2048 (32,768 cores), 512 (8,192 cores) |
| Interconnect | 5D Torus (jobs), QDR InfiniBand (I/O) |
| RAM/Node | 16 GB |
| Cores/Node | 16 (64 threads) |
| Login/Devel Node | bgq01, bgq02 |
| Vendor Compilers | bgxlc, bgxlf |
| Queue Submission | LoadLeveler |

Specifications

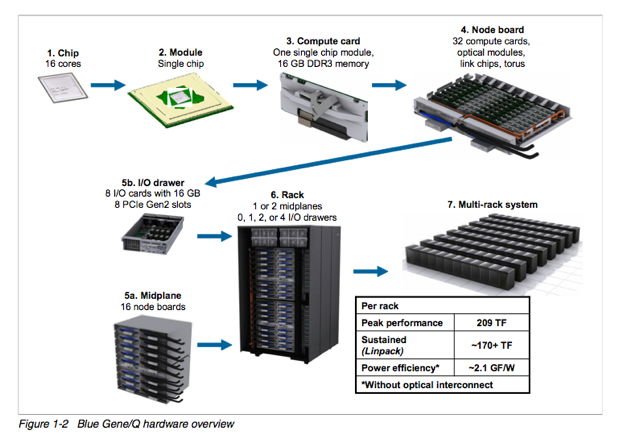

BGQ is an extremely dense and energy-efficient third-generation IBM supercomputer built around a system-on-a-chip compute node that has a 16-core 1.6 GHz PowerPC-based CPU (PowerPC A2) with 16 GB of RAM and runs a very lightweight Linux OS called CNK. The nodes (16 cores apiece) are bundled in groups of 32 into node boards, 16 of these boards make up a midplane, and there are 2 midplanes per rack, for 16,384 cores and 16 TB of RAM per rack. The compute nodes are all connected by a custom 5D high-speed interconnect. Each rack has 16 Power7 I/O nodes that run a full Red Hat Linux OS, manage the compute nodes, and mount the GPFS filesystem.
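The per-rack totals follow from the packaging hierarchy described above. A minimal sketch of that arithmetic in Python; the constant names are illustrative only and do not come from any IBM tool:

```python
# Packaging hierarchy as described in the text above.
CORES_PER_NODE = 16
RAM_GB_PER_NODE = 16
NODES_PER_NODE_BOARD = 32
NODE_BOARDS_PER_MIDPLANE = 16
MIDPLANES_PER_RACK = 2

# Multiply up the hierarchy: node board -> midplane -> rack.
nodes_per_midplane = NODES_PER_NODE_BOARD * NODE_BOARDS_PER_MIDPLANE
nodes_per_rack = nodes_per_midplane * MIDPLANES_PER_RACK
cores_per_rack = nodes_per_rack * CORES_PER_NODE
ram_tb_per_rack = nodes_per_rack * RAM_GB_PER_NODE / 1024  # 1 TB taken as 1024 GB

print("nodes per midplane:", nodes_per_midplane)
print("nodes per rack:    ", nodes_per_rack)
print("cores per rack:    ", cores_per_rack)
print("RAM per rack (TB): ", ram_tb_per_rack)
```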

Jobs

BGQ job size is typically determined by midplanes (512 nodes or 8192 cores); however, sub-blocks can be used to further subdivide midplanes, with a minimum of one I/O node per block. In SciNet's configuration (with 8 I/O nodes per midplane) this makes 64 nodes (1024 cores) the smallest job size. A block is set up to match a job's configuration (number of nodes and network configuration) and is partitioned and booted specifically for that job. If a new job's requirements match an existing block, the nodes do not need to be rebooted or repartitioned; however, if any of the parameters differ, the block must be recreated and rebooted to match the new job's specifications. When running with LoadLeveler this happens automatically and, thanks to the lightweight OS used on the compute nodes, very quickly.
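The smallest job size quoted above can be reproduced with a short calculation. This is only a sketch: the function name is hypothetical, and it assumes the I/O nodes are shared evenly among the compute nodes of a midplane.

```python
CORES_PER_NODE = 16


def smallest_block_nodes(nodes_per_midplane: int, io_nodes_per_midplane: int) -> int:
    """Compute nodes in the smallest block, given one I/O node per block.

    Assumes (hypothetically) that compute nodes are divided evenly
    among the I/O nodes of the midplane.
    """
    if io_nodes_per_midplane <= 0:
        raise ValueError("a midplane needs at least one I/O node")
    if nodes_per_midplane % io_nodes_per_midplane != 0:
        raise ValueError("compute nodes do not divide evenly among I/O nodes")
    return nodes_per_midplane // io_nodes_per_midplane


# Configuration described in the text: 512 nodes and 8 I/O nodes per midplane.
nodes = smallest_block_nodes(512, 8)
print(nodes, "nodes,", nodes * CORES_PER_NODE, "cores")
```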

5D Torus (network)

The network topology for Blue Gene/Q is a five-dimensional (5D) torus or mesh, with direct links between the nearest neighbors in the ±A, ±B, ±C, ±D, and ±E directions. Because of this topology, only a few job sizes use the network efficiently. They are listed below.

| Node Boards | Compute Nodes | Cores | Torus Dimensions |
|---|---|---|---|
| 1 | 32 | 512 | 2x2x2x2x2 |
| 2 | 64 | 1024 | 2x2x4x2x2 |
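The node count of each listed job size is the product of its five torus extents. A small Python check of the two rows above; the helper name is made up for illustration:

```python
from math import prod

CORES_PER_NODE = 16


def torus_nodes(dimensions: str) -> int:
    """Return the node count for a torus shape string such as '2x2x4x2x2'."""
    extents = [int(part) for part in dimensions.lower().split("x")]
    if len(extents) != 5:
        raise ValueError("expected five extents (A, B, C, D, E)")
    return prod(extents)


# (node boards, torus dimensions) pairs taken from the table above.
for boards, dims in [(1, "2x2x2x2x2"), (2, "2x2x4x2x2")]:
    nodes = torus_nodes(dims)
    print(boards, "board(s):", nodes, "nodes,", nodes * CORES_PER_NODE, "cores")
```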

*** WAT2Q SPECIFIC ****

Compile

/bgsys/drivers/V1R1M1/ppc64/comm/xl/bin/mpich2version

/bgsys/drivers/V1R1M1/ppc64/comm/xl/bin/mpixlc

/bgsys/drivers/V1R1M1/ppc64/comm/xl/bin/mpixlf90

Run a Job

When not using LoadLeveler, there is a direct launch program on BGQ called runjob that acts a lot like mpirun/mpiexec. The "block" argument is the predefined group of nodes that are already booted. See the next section on how to create these blocks manually. Note that a block does not need to be rebooted between jobs, only when the number of nodes or the network parameters need to be changed.

runjob --block R00-M0-N03-32 --ranks-per-node=16 --np 512 --cwd=/gpfs/DDNgpfs3/xsnorthrup/osu_bgq --exe=/gpfs/DDNgpfs3/xsnorthrup/osu_bgq/osu_mbw_mr --args file.in

or use the other form, which is convenient if your application has multiple arguments:

runjob --block R00-M0-N03-32 --ranks-per-node=16 --np 512 --cwd=/gpfs/DDNgpfs3/xsnorthrup/osu_bgq : /gpfs/DDNgpfs3/xsnorthrup/osu_bgq/osu_mbw_mr file.in
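In both examples the --np value equals the number of nodes in the block times --ranks-per-node (32 × 16 = 512). A hedged Python sketch that assembles the second form of the command from those pieces follows. The helper name is hypothetical, it uses only the flags shown above, and it assumes every node in the block is filled; it only prints the command and does not launch anything.

```python
import shlex


def runjob_command(block, nodes, ranks_per_node, cwd, exe, args=()):
    """Build the 'runjob ... : exe args' form as an argument list.

    Assumes every node in the block is fully used, so that
    np = nodes * ranks_per_node.
    """
    np = nodes * ranks_per_node
    return [
        "runjob",
        "--block", block,
        f"--ranks-per-node={ranks_per_node}",
        "--np", str(np),
        f"--cwd={cwd}",
        ":", exe, *args,
    ]


cmd = runjob_command(
    block="R00-M0-N03-32",
    nodes=32,
    ranks_per_node=16,
    cwd="/gpfs/DDNgpfs3/xsnorthrup/osu_bgq",
    exe="/gpfs/DDNgpfs3/xsnorthrup/osu_bgq/osu_mbw_mr",
    args=["file.in"],
)
print(shlex.join(cmd))
```

Keeping the command as a list until the final print avoids quoting mistakes when an argument contains spaces.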

The flag

--verbose #

where # is a level from 1 to 7, is also very useful if you are trying to debug an application.

To see running jobs and the status of available blocks use:

list_jobs

list_blocks

Setup blocks

To reconfigure the BGQ nodes, use bg_console:

bg_console

There are various options to create block types (section 3.2 in the BGQ admin manual), but the smallest is created using the following command:

gen_small_block <blockid> <midplane> <cnodes> <nodeboard>

gen_small_block R00-M0-N03-32 R00-M0 32 N03

The block then needs to be booted using:

allocate R00-M0-N03-32

If those resources are already booted into another block, that block must be freed before the new block can be allocated.

free R00-M0-N03

There are many other functions in bg_console:

help all

The BGQ default nomenclature for hardware is as follows:

(R)ack - (M)idplane - (N)ode board or block - (J)node - (C)ore

So R00-M01-N03-J00-C02 would correspond to the first rack, second midplane, fourth node board, first node, and third core (numbering starts at 0).
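A location string in this nomenclature can be split into its fields mechanically. The following Python sketch is illustrative only: the function and field names are made up, it handles only purely decimal fields, and reading each number as a zero-based index is an assumption inferred from R00 being the first rack.

```python
import re

# One letter prefix per hardware level, each followed by a decimal index.
LOCATION_RE = re.compile(
    r"^R(?P<rack>\d+)-M(?P<midplane>\d+)-N(?P<node_board>\d+)"
    r"-J(?P<node>\d+)-C(?P<core>\d+)$"
)


def parse_location(location: str) -> dict:
    """Split a full R-M-N-J-C location string into integer indices."""
    match = LOCATION_RE.match(location)
    if match is None:
        raise ValueError(f"not a full R-M-N-J-C location: {location!r}")
    return {name: int(value) for name, value in match.groupdict().items()}


fields = parse_location("R00-M01-N03-J00-C02")
for name, index in fields.items():
    # Assumed zero-based, so the ordinal position is index + 1.
    print(f"{name}: index {index} (position {index + 1})")
```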

I/O

GPFS