BGQ


Revision as of 09:42, 3 October 2012

| Blue Gene/Q (BGQ) | |
|---|---|
| Installed | August 2012 |
| Operating System | RH6.2, CNK (Linux) |
| Number of Nodes | 2048 (32,768 cores), 512 (8,192 cores) |
| Interconnect | 5D Torus (jobs), QDR InfiniBand (I/O) |
| RAM/Node | 16 GB |
| Cores/Node | 16 (64 threads) |
| Login/Devel Node | bgqdev-fen1, bgq-fen1 |
| Vendor Compilers | bgxlc, bgxlf |
| Queue Submission | LoadLeveler |

********** NOTE **********

The BGQ is in beta testing, so the environment is still under development, as is the wiki information contained here.

Support Email

Please use bgq-support@scinethpc.ca for BGQ-specific inquiries.

Specifications

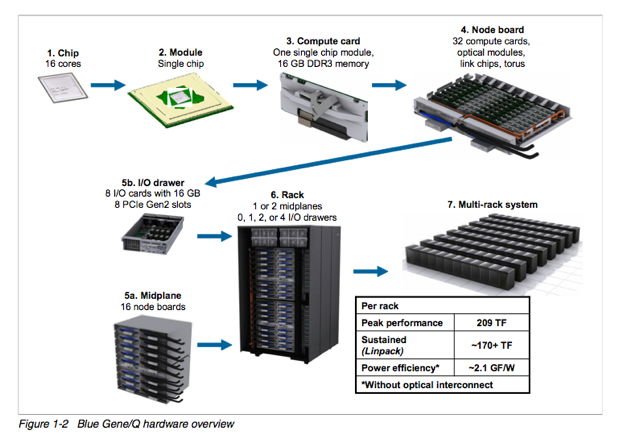

BGQ is an extremely dense and energy-efficient third-generation IBM Blue Gene supercomputer. It is built around a system-on-a-chip compute node that combines a 16-core 1.6 GHz PowerPC-based CPU (PowerPC A2) with 16 GB of RAM. The nodes are bundled in groups of 32 into a node board (512 cores), and 16 boards make up a midplane (8,192 cores). With 2 midplanes per rack, each rack holds 16,384 cores and 16 TB of RAM (16 GB per node). The compute nodes run a very lightweight, Linux-like operating system called CNK (Compute Node Kernel) and are all connected by a custom high-speed 5D torus interconnect. Each rack has 16 I/O nodes that run a full Red Hat Linux OS, manage the compute nodes, and mount the filesystem. SciNet has 2 BGQ systems: a half-rack 8,192-core development system and a 2-rack 32,768-core production system.
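The packaging hierarchy in the paragraph above is simple multiplication, and it is easy to slip a digit when working out per-rack totals. The minimal POSIX shell sketch below multiplies it out level by level. The function names `report` and `bgq_hierarchy` are hypothetical, and the constants are just the figures quoted above.

```shell
#!/bin/sh
# Figures quoted in the specifications paragraph above.
CORES_PER_NODE=16
GB_PER_NODE=16
NODES_PER_BOARD=32
BOARDS_PER_MIDPLANE=16
MIDPLANES_PER_RACK=2

# Print one level of the hierarchy: $1 = level name, $2 = node count.
report() {
    echo "$1: $2 nodes, $(($2 * $CORES_PER_NODE)) cores, $(($2 * $GB_PER_NODE)) GB RAM"
}

# Hypothetical helper: multiply the packaging hierarchy out level by level.
bgq_hierarchy() {
    board=$NODES_PER_BOARD
    midplane=$((board * BOARDS_PER_MIDPLANE))
    rack=$((midplane * MIDPLANES_PER_RACK))
    report board "$board"
    report midplane "$midplane"
    report rack "$rack"
}

bgq_hierarchy
```

The last line of output reports 1024 nodes, 16384 cores and 16384 GB (16 TB) of RAM per rack. The half-rack development system corresponds to one midplane, and the 2-rack production system is twice the rack figures.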

5D Torus Network

The network topology of Blue Gene/Q is a five-dimensional (5D) torus, with direct links between nearest neighbors in the ±A, ±B, ±C, ±D, and ±E directions. As a result, only a few block sizes use the network efficiently:

| Node Boards | Compute Nodes | Cores | Torus Dimensions |
|---|---|---|---|
| 1 | 32 | 512 | 2x2x2x2x2 |
| 2 (adjacent pairs) | 64 | 1024 | 2x2x4x2x2 |
| 4 (quadrants) | 128 | 2048 | 2x2x4x4x2 |
| 8 (halves) | 256 | 4096 | 4x2x4x4x2 |
| 16 (midplane) | 512 | 8192 | 4x4x4x4x2 |
| 32 (1 rack) | 1024 | 16384 | 4x4x4x8x2 |
| 64 (2 racks) | 2048 | 32768 | 4x4x8x8x2 |
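Each row of the table follows from the torus dimensions: the node count is the product of the five extents A×B×C×D×E, and the core count is that product times 16. The small POSIX shell sketch below checks every row this way. `shape_nodes` is a hypothetical helper name, not a BGQ tool.

```shell
#!/bin/sh
CORES_PER_NODE=16

# Hypothetical helper: number of compute nodes in a 5D shape "AxBxCxDxE".
shape_nodes() {
    nodes=1
    # Split the shape on "x" into the positional parameters.
    old_ifs=$IFS
    IFS=x
    set -- $1
    IFS=$old_ifs
    if [ $# -ne 5 ]; then
        echo "expected a 5D shape AxBxCxDxE" >&2
        return 1
    fi
    # Multiply the five torus extents together.
    for extent in "$@"; do
        nodes=$((nodes * extent))
    done
    echo "$nodes"
}

# Reproduce the node and core columns of the table above.
for shape in 2x2x2x2x2 2x2x4x2x2 2x2x4x4x2 4x2x4x4x2 4x4x4x4x2 4x4x4x8x2 4x4x8x8x2; do
    n=$(shape_nodes "$shape")
    echo "$shape: $n nodes, $((n * CORES_PER_NODE)) cores"
done
```

The same product is what matters for the --shape argument of sub-block jobs, where the shape's node count times the ranks per node should equal the number of ranks requested.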

Login/Devel Nodes

The development nodes for the BGQ are bgqdev-fen1 for the half-rack development system and bgq-fen1 for the 2-rack production system. You can log in to them from the regular login.scinet.utoronto.ca login nodes or directly via bgqdev.scinet.utoronto.ca. These nodes are Power7 machines running Linux, and they serve as compilation and submission hosts for the BGQ. Programs are cross-compiled for the BGQ on these nodes and then submitted to the queue using LoadLeveler.

Compilers

The BGQ uses IBM XL compilers to cross-compile code for the BGQ. Compilers are available for FORTRAN, C, and C++. By default the compilers produce static binaries; however, the BGQ now also supports dynamic libraries. The compiler names follow the XL conventions with the prefix bg, so bgxlc and bgxlf are the C and FORTRAN compilers, respectively. Most users, however, will use the MPI variants, which become available after loading the mpich2 module:

module load mpich2
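Below is a dry-run sketch that picks an MPI compiler wrapper from a source file's extension and prints the compile command without running it. It assumes that the mpich2 module provides the usual IBM XL MPI wrapper names (mpixlc, mpixlcxx, mpixlf77, mpixlf90). That assumption is not stated on this page, so check what the module actually puts on your PATH. `compile_cmd` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print the compile command for a source file.
# ASSUMPTION: after "module load mpich2" the MPI wrappers are named
# mpixlc (C), mpixlcxx (C++), mpixlf77 (fixed-form Fortran) and
# mpixlf90 (free-form Fortran); verify these names on your PATH.
compile_cmd() {
    src=$1
    # Default output name: the source name with its extension replaced by .exe.
    exe=${2:-${src%.*}.exe}
    case $src in
        *.c)                    cc=mpixlc ;;
        *.cpp|*.cxx|*.cc|*.C)   cc=mpixlcxx ;;
        *.f90|*.F90)            cc=mpixlf90 ;;
        *.f|*.F)                cc=mpixlf77 ;;
        *) echo "unknown source type: $src" >&2; return 1 ;;
    esac
    echo "$cc -O3 -o $exe $src"
}

compile_cmd mycode.c
compile_cmd solver.f90 solver.exe
```

Printing the command instead of executing it makes it easy to confirm the wrapper choice before building on bgqdev-fen1 or bgq-fen1.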

Job Submission

Because the BGQ architecture differs from that of the development nodes, the only way to test your program is to submit a job to the BGQ. Jobs are submitted through LoadLeveler and launched with runjob, which is in many ways similar to mpirun or mpiexec. As shown above in the network topology overview, only a few job sizes are optimal, and the choice is further constrained by the requirement that each block have at least one I/O node. In SciNet's configuration there are 8 I/O nodes per midplane, so each I/O node serves 512 / 8 = 64 compute nodes, which makes 64 nodes (1024 cores) the smallest block size. Normally the block size matches the job size so that the job gets fully dedicated resources. Smaller jobs can run within the same block, but they then share network and I/O resources. These are referred to as sub-block jobs and are described in more detail below.

runjob

runjob is the launcher that starts jobs on the BGQ, and it behaves much like mpirun/mpiexec. The --block argument names a predefined group of nodes that has already been booted. When you use LoadLeveler, the block is determined for you. For example, block R00-M0-N03-64 is made up of 2 node boards with 64 compute nodes (1024 cores), so running 16 ranks per node gives 1024 MPI ranks:

runjob --block BLOCK_ID --ranks-per-node=16 --np 1024 --cwd=$PWD : $PWD/code -f file.in

The flag

--verbose #

where # is a level from 1 to 7, is also very useful if you are trying to debug an application.
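The runjob command line above follows a fixed pattern: the total rank count is the number of compute nodes times --ranks-per-node, and the executable and its arguments come after the ":". The following dry-run POSIX shell sketch only prints such a command, optionally adding the --verbose level in the 1-7 range described above. `runjob_cmd` and its argument order are hypothetical. The only runjob options it emits are the ones shown on this page.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print (not execute) a runjob command line.
# Usage: runjob_cmd BLOCK NODES RANKS_PER_NODE VERBOSE EXE [ARGS...]
# VERBOSE=0 leaves out --verbose; otherwise it must be 1-7 as described above.
runjob_cmd() {
    block=$1 nodes=$2 rpn=$3 verbose=$4
    shift 4
    # Total MPI ranks = compute nodes x ranks per node.
    np=$((nodes * rpn))
    opts="--block $block --ranks-per-node=$rpn --np $np"
    if [ "$verbose" -ne 0 ]; then
        if [ "$verbose" -lt 1 ] || [ "$verbose" -gt 7 ]; then
            echo "verbose level must be between 1 and 7" >&2
            return 1
        fi
        opts="$opts --verbose $verbose"
    fi
    # Everything after the ":" is the executable and its own arguments.
    echo "runjob $opts --cwd=$PWD : $*"
}

# The 64-node, 1024-rank example above, then the same run at verbose level 4.
runjob_cmd R00-M0-N03-64 64 16 0 "$PWD/code" -f file.in
runjob_cmd R00-M0-N03-64 64 16 4 "$PWD/code" -f file.in
```

Deriving --np from the node count avoids the common mistake of requesting more ranks than the block can hold.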

Batch Jobs

Job submission is done through LoadLeveler with a few Blue Gene-specific keywords. The bg_size keyword is given in number of nodes, not cores, so bg_size = 32 would be 512 cores.

#!/bin/sh
# @ job_name = bgsample
# @ job_type = bluegene
# @ comment = "BGQ Job By Size"
# @ error = $(job_name).$(Host).$(jobid).err
# @ output = $(job_name).$(Host).$(jobid).out
# @ bg_size = 32
# @ wall_clock_limit = 30:00
# @ bg_connectivity = Torus
# @ queue
# Launch all BGQ jobs using runjob
export OMP_NUM_THREADS=1
runjob --cwd=$SCRATCH/ : $HOME/mycode.exe myflags
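Two quick checks are worth doing before submitting a script like the one above. First, bg_size is in nodes, so multiply it by 16 to get cores. Second, each node has 16 cores with 4 hardware threads each (the "16 (64 threads)" entry in the table at the top), so the ranks per node passed to runjob times OMP_NUM_THREADS should not exceed 64. The POSIX shell sketch below performs both checks. `check_job` is a hypothetical helper, and the 64-thread limit is taken from that table.

```shell
#!/bin/sh
CORES_PER_NODE=16
HW_THREADS_PER_NODE=64   # 16 cores x 4 hardware threads, per the table above

# Hypothetical sanity check: check_job BG_SIZE RANKS_PER_NODE OMP_NUM_THREADS
check_job() {
    bg_size=$1 rpn=$2 omp=$3
    threads=$((rpn * omp))
    # bg_size counts nodes, not cores.
    echo "bg_size=$bg_size -> $((bg_size * CORES_PER_NODE)) cores"
    if [ "$threads" -gt "$HW_THREADS_PER_NODE" ]; then
        echo "oversubscribed: $rpn ranks x $omp threads = $threads > $HW_THREADS_PER_NODE" >&2
        return 1
    fi
    echo "ok: $rpn ranks x $omp threads = $threads of $HW_THREADS_PER_NODE hardware threads per node"
}

# 16 ranks per node with OMP_NUM_THREADS=1, as in the script above.
check_job 32 16 1
```

For a hybrid MPI/OpenMP run, 16 ranks per node with OMP_NUM_THREADS=4 exactly fills the 64 hardware threads, while OMP_NUM_THREADS=8 at the same rank count would be rejected by this check.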

To submit the script to the queue, use

llsubmit myscript.sh

To see running jobs, use

llq

To cancel a job, use

llcancel JOBID

To look at details of the Blue Gene system, use

llbgstatus

Interactive jobs

TBD

Sub-block jobs

To run a sub-block job (i.e., to share a block), you need to specify a "--corner" within the block where the job starts and a 5D AxBxCxDxE "--shape". The following example shows 2 jobs sharing the same block.

runjob --block R00-M0-N03-64 --corner R00-M0-N03-J00 --shape 1x1x1x2x2 --ranks-per-node=16 --np 64 --cwd=$PWD : $PWD/code -f file.in
runjob --block R00-M0-N03-64 --corner R00-M0-N03-J04 --shape 2x2x2x2x1 --ranks-per-node=16 --np 256 --cwd=$PWD : $PWD/code -f file.in
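In the two sub-block commands, the node count of each --shape times --ranks-per-node should equal --np: 1x1x1x2x2 is 4 nodes x 16 = 64 ranks, and 2x2x2x2x1 is 16 nodes x 16 = 256 ranks. Together they use 20 of the block's 64 nodes. The POSIX shell sketch below automates that arithmetic. `shape_nodes` and `check_subblock` are hypothetical helpers. The sketch does not check that the corners and shapes avoid overlapping, which still has to be verified by hand.

```shell
#!/bin/sh
# Hypothetical helper: number of compute nodes in a 5D shape "AxBxCxDxE".
shape_nodes() {
    old_ifs=$IFS; IFS=x
    set -- $1
    IFS=$old_ifs
    n=1
    for e in "$@"; do n=$((n * e)); done
    echo "$n"
}

# Hypothetical check: check_subblock SHAPE RANKS_PER_NODE NP
check_subblock() {
    shape=$1 rpn=$2 np=$3
    nodes=$(shape_nodes "$shape")
    if [ $((nodes * rpn)) -ne "$np" ]; then
        echo "$shape: $nodes nodes x $rpn ranks != --np $np" >&2
        return 1
    fi
    echo "$shape: $nodes nodes, --np $np ok"
}

# The two sub-block jobs above, sharing the 64-node block R00-M0-N03-64.
block_nodes=64
used=0
for job in "1x1x1x2x2 16 64" "2x2x2x2x1 16 256"; do
    set -- $job
    check_subblock "$1" "$2" "$3"
    used=$((used + $(shape_nodes "$1")))
done
echo "nodes used: $used of $block_nodes"
```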

Filesystem

The BGQ has its own dedicated 500TB GPFS filesystem for both $HOME and $SCRATCH.

Documentation

BGQ System Administration Guide