Using ParaView

WARNING: SciNet is in the process of replacing this wiki with a new documentation site. For current information, please go to https://docs.scinet.utoronto.ca

ParaView is a parallel, client-server visualization system that lets you use SciNet's GPC compute nodes to render data stored on SciNet while manipulating the results interactively on your own desktop. Using the ParaView server on SciNet is much like using ParaView locally; the additional step is setting up a connection between your desktop and the compute nodes.

Installing ParaView

To use ParaView, you need the client software installed on your own system; download it from the ParaView website, which provides binaries for Linux, Mac, and Windows. The client version must exactly match the version installed on the server, currently 3.12 or 3.14.1. The client has all the functionality of the server and can also analyze data locally.

SSH Forwarding For ParaView

To use the ParaView server on the GPC interactively, you have to set up SSH port forwarding so that the client on your desktop can reach the server through the SciNet login nodes. The steps are:

- Set up an SSH key that you can use to log into SciNet

- Submit an interactive job, which gives you a shell on the head node where the server will run

- Start SSH port forwarding

- Start the ParaView server

- Connect the client to the server

SSH Keys

To log into the compute nodes where ParaView will run, you need an SSH key set up, because password authentication will not work there. Our SSH Keys and SciNet page describes how to do this.

Log into node

First, get a shell on the node from which you'll start the ParaView server. This is typically done by starting an interactive job on the GPC, for example on the debug queue or on the Sandy Bridge large-memory nodes. ParaView can in principle use as many nodes as you give it. For example, either of the following starts a one-hour interactive job:

qsub -l nodes=1:m128g:ppn=16,walltime=1:00:00 -q sandy -I

or

qsub -l nodes=2:ppn=8,walltime=1:00:00 -q debug -I

Once the job has started, you'll be placed in a shell on the job's head node; typing `hostname' will tell you the name of the host, e.g.

$ hostname
gpc-f148n089-ib0

or

$ hostname
gpc-f107n045-ib0

You will need this hostname in the following steps.
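If you'd rather not copy the name by hand, the following lines are a small convenience sketch: they store the hostname in a variable (gpcnode, the same name used in the next step) and print a line you can paste into a terminal on your desktop.

```shell
# Run in the interactive job's shell on the head node.
# Store this node's hostname (e.g. gpc-f148n089-ib0).
gpcnode="$(hostname)"

# Print an export line to paste into a terminal on your desktop.
printf 'export gpcnode="%s"\n' "$gpcnode"
```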

Start SSH port forwarding

Once your SSH key is set up, start the port forwarding from a terminal window on your local machine, substituting the compute node's hostname from above; here we'll use gpc-f148n089-ib0 as the example:

$ export gpcnode="gpc-f148n089-ib0"

$ ssh -N -L 20080:${gpcnode}:22 -L 20090:${gpcnode}:11111 login.scinet.utoronto.ca

This command prints nothing and will appear to just sit there; it keeps running until you terminate the forwarding (e.g. with Ctrl-C). It does not start a remote shell or command (-N); instead it connects to login.scinet.utoronto.ca and, from there, forwards (-L) your local port 20080 to port 22 on ${gpcnode}, and your local port 20090 to port 11111 on ${gpcnode}. We'll use the first for ssh'ing to the compute node (mainly for testing), and the second to connect the local ParaView client to the remote ParaView server.
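If you open tunnels often, the command can be wrapped in a small shell function for your desktop. This is only a sketch: pv_tunnel and its DRY_RUN switch are our own names, not SciNet tools, and the ports are the same 20080 and 20090 used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a SciNet tool) that opens the same two forwards:
#   local 20080 -> compute node port 22    (ssh, for testing)
#   local 20090 -> compute node port 11111 (ParaView server)
# Set DRY_RUN=1 to print the ssh command instead of running it.
pv_tunnel() {
  local node="${1:?usage: pv_tunnel <compute-node> [login-host]}"
  local login="${2:-login.scinet.utoronto.ca}"
  local cmd=(ssh -N -L "20080:${node}:22" -L "20090:${node}:11111" "$login")
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"   # blocks until the forwarding is terminated
  fi
}

# Usage:
#   DRY_RUN=1 pv_tunnel gpc-f148n089-ib0   # show the command
#   pv_tunnel "$gpcnode"                   # open the tunnel
```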

To make sure the port forwarding works, try ssh'ing directly to the compute node from another terminal window on your desktop:

$ ssh -p 20080 [your-scinet-username]@localhost

This should land you directly on the compute node. If it does not, something is wrong with the SSH forwarding.
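You can also check from your desktop that the ssh command is still listening on both forwarded ports. The sketch below uses bash's /dev/tcp redirection (a bash feature, not available in plain sh); port_open is a hypothetical helper name. A successful check only shows that the local end of the tunnel is up; the ssh -p 20080 login above remains the real end-to-end test.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if something accepts TCP connections on host:port.
port_open() {
  local host="$1" port="$2"
  # Open fd 3 read/write in a subshell; the exit status reports whether connect() worked.
  ( exec 3<>"/dev/tcp/${host}/${port}" ) 2>/dev/null
}

# Check the two local ends of the tunnel.
for p in 20080 20090; do
  if port_open localhost "$p"; then
    echo "port $p: listening"
  else
    echo "port $p: nothing listening (is the ssh -N command still running?)"
  fi
done
```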

Start Server

Now that the tunnel is set up, you can start the ParaView server on the compute node. First load the following modules:

$ module load Xlibraries intel gcc python openmpi paraview

(You can use intelmpi instead of openmpi; any module that is already loaded does not have to be loaded again.)

Then start the ParaView server with mpirun, as with any MPI job:

$ mpirun -np [NP] pvserver --use-offscreen-rendering

where [NP] is the total number of processes to run: 16 per node on the large-memory (sandy) nodes, or 8 per node otherwise.
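Rather than typing NP by hand, you can derive it from the job's allocation. This sketch assumes the Torque/PBS convention that $PBS_NODEFILE lists each node once per requested core (so nodes=2:ppn=8 yields 16 lines); count_slots is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count MPI slots in a PBS node file (default: $PBS_NODEFILE).
# Assumes one line per allocated core, as Torque/PBS writes it.
count_slots() {
  local nodefile="${1:-${PBS_NODEFILE:?count_slots: not inside a PBS job}}"
  # Some wc implementations pad the count with spaces; strip them.
  wc -l < "$nodefile" | tr -d ' '
}

# Usage on the head node, after loading the modules:
#   NP="$(count_slots)"
#   mpirun -np "$NP" pvserver --use-offscreen-rendering
```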

Once it is running, the ParaView server should print

Listen on port: 11111
Waiting for client...

Connect Client and Server

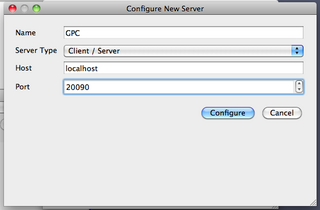

Once the server is running, you can connect the client. Start the ParaView client on your desktop and choose File->Connect. Click `Add Server', give the server a name (say, GPC), and set the port to 20090. The other values should be correct by default: the host is localhost and the server type is Client/Server. Click `Configure'.

In the next window, you'll be asked for a command to start the server; select `Manual' and click OK.

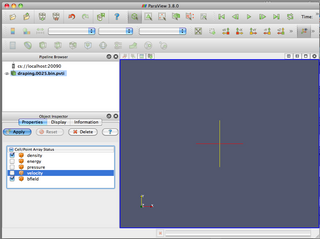

With the server selected, click `Connect'. On the compute node, the server should respond `Client connected'. From then on the client works with files on the GPC rather than on your local machine; for instance, File->Open shows the files on the GPC.

From here, the ParaView Wiki explains how to visualize your data.