Introduction To Performance

The Concepts of Parallel Performance

Parallel computing used to be a very specialized domain, but now even making the best use of your laptop, which almost certainly has multiple independent computing cores, requires understanding the basic concepts of performance in a parallel environment.

Most fundamentally, parallel programming allows three possible ways of getting more and better science done:

- Running your computation many times

- If you have a program that works in serial, having many processors available to you allows you to run many copies of the same program at once, improving your throughput. This can be a fairly trivial use of parallel computing and doesn't require very specialized hardware, but it can be extremely useful for running parameter studies or sensitivity studies, for instance. Best of all, this is essentially guaranteed to run efficiently if your serial code runs efficiently! Because this doesn't require fancy hardware, it is a waste of resources to use the Tightly Coupled System for these sorts of tasks; they must instead be run on the General Purpose Cluster.

- Running your computation faster

- This is what most people think of as parallel computing. It can take a lot of work to make an existing code run efficiently on many processors, or to design a new code to make use of these resources, but when it works, one can achieve a substantial speedup of individual jobs. This might mean the difference between a computation running in a feasible length of time for a research project or taking years to complete --- so while it may be a lot of work, it may be your only option. To determine whether your code runs well on many processors, you need to measure speedup and efficiency; to see how many processors one should use for a given problem you must run strong scaling tests.

- Running your computation on larger problems

- One achieves speedup by using more processors on the same problem. But by running your job in parallel you may have access to more resources than just processors: for instance, more memory, or more disks. In this case, you may be able to run problems that simply wouldn't be possible on a single processor or a single computer; one can achieve significant sizeup. To find how large a problem one can efficiently run, one measures efficiency and runs weak scaling tests.

Of course, these aren't exclusive; one can take advantage of any combination of the above. It may be that your problem runs efficiently on 8 cores but no more; however, you may be able to get use of more processors by running many jobs to explore parameter space, and already on 8 cores you may be able to consider larger problems than you can with just one!

Throughput

Throughput is the most fundamental measure of performance, and the one that ultimately matters most to most computational scientists: if you have N computations that you need to have done for your research project, how quickly can you get them done? Everything else we'll consider here is just a way of increasing throughput $T$:

$T = \frac{\text{number of computations}}{\text{unit time}}$

If you have many independent computations to perform (such as a parameter study or a sensitivity study) you can increase throughput almost arbitrarily by running them alongside each other at the same time, limited only by the number of processors available (or the wait time in the queue, or the disk space available, or some other external resource constraint). This approach obviously doesn't work if you only have one computation to perform, or if later computations require the output from previous ones. In these cases, or when the individual jobs take infeasibly long, or cannot be performed on only one processor, one must resort to also using parallel programming techniques to parallelize the individual jobs.

Compute Time

Fundamental to everything else that follows is measuring the amount of time a computation takes on some problem size/amount of work $N$ and some number of processors $P$. We'll denote this by $t(N,P)$. The easiest way to measure this time is with the time command that comes on most flavours of Unix in /bin/time or /usr/bin/time:

    /bin/time myprogram
    ...normal program output...
    658.44user 0.85system 10:59.41elapsed

The format of the times output at the end may vary from system to system, but the basic information returned will be the same. The real or elapsed time is the actual wallclock time that elapsed during the run; the user or cpu time is the CPU time that was actually spent doing your computation; and the system time is the CPU time the operating system spent on your program's behalf, for instance servicing file input/output requests. Our goal will be to reduce the real wallclock time that the simulation takes as much as possible while still making efficient use of the resources available.
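The same three numbers can also be collected from inside a program. The following is a minimal sketch, assuming a POSIX system that provides `getrusage` and `gettimeofday`; the `usage_times` struct and the `get_times` helper are hypothetical names introduced here for illustration only.

```c
#include <stddef.h>
#include <sys/resource.h>
#include <sys/time.h>

/* Hypothetical helper type: wallclock, user and system time in seconds. */
struct usage_times {
    double wall;  /* time-of-day reading; only differences are meaningful */
    double user;  /* CPU time spent in the program's own code */
    double sys;   /* CPU time spent in the kernel on the program's behalf */
};

/* Convert a struct timeval to seconds. */
static double tv_seconds(struct timeval tv) {
    return (double)tv.tv_sec + (double)tv.tv_usec / 1.0e6;
}

/* Fill *out with the times for the calling process so far.
 * Returns 0 on success and -1 if either system call fails. */
int get_times(struct usage_times *out) {
    struct rusage ru;
    struct timeval now;

    if (getrusage(RUSAGE_SELF, &ru) != 0) return -1;
    if (gettimeofday(&now, NULL) != 0) return -1;

    out->wall = tv_seconds(now);
    out->user = tv_seconds(ru.ru_utime);
    out->sys  = tv_seconds(ru.ru_stime);
    return 0;
}
```

Calling `get_times` once before and once after a region of code and subtracting the two results gives the elapsed, user and system time for that region, in the same spirit as the output of /bin/time for the whole program.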

Parallel Speedup

The speedup of an individual job with some amount of work $N$ as you go from running it serially to running on $P$ processors is simply:

$S(N,P) = \frac{t(N,1)}{t(N,P)}$

where $t(N,P)$ is the compute time for work $N$ on $P$ processors. That is, the ratio of the time it takes to run the computation on one processor to the time it takes on $P$. The way this is usually done is to run the parallel code on $P$ processors and on $1$ processor and take the ratio of the two times; but this is a form of cheating, as the parallel version of the code will generally have overheads (even in the one-processor case) compared to the best available serial-only version of the code. The best thing to do in considering the efficiency of the parallelization is to compare the parallel code to the best available serial code that does the same job.

If you are considering the speedup of a problem that doesn't fit onto one processor, of course, the concept of speedup can be generalized; one needn't start at $P=1$.

It should go without saying that, while developing your parallel code and during performance tuning, you should check that you get the same results with multiple processors as with some `known good' serial test case; it is even easier to introduce bugs in parallel code than it is in serial code!

Efficiency

Once you have a parallel code and some timing results, you can look at how efficiently you are making use of the resources as you use more and more processors.

The parallel efficiency of a computation of some fixed work size $N$ running on $P$ processors as compared to the $P=1$ case is

$E = \frac{S}{P} = \frac{t(N,1)}{P \, t(N,P)}$

That is, if you get a speedup of $8\times$ in going from one to eight processors, you are at 1.00 or 100% efficiency; anything less and you are at lower efficiency. It isn't uncommon to achieve greater than 100% parallel efficiencies for small numbers of processors for some types of problems; as you go to more processors, you also have more processor cache, and thus more of the problem's data can fit into fast cache. This is called super-linear speedup and sadly seldom extends out to very many processors.
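The two definitions above translate directly into code. The sketch below assumes you already have a serial time and a parallel time in seconds; the function names `speedup`, `efficiency` and `report_scaling` are made up for this example.

```c
#include <stdio.h>

/* Speedup: serial time divided by the time on nprocs processors. */
double speedup(double t_serial, double t_parallel) {
    return t_serial / t_parallel;
}

/* Parallel efficiency: speedup divided by the number of processors.
 * A value of 1.0 corresponds to 100% efficiency. */
double efficiency(double t_serial, double t_parallel, int nprocs) {
    return speedup(t_serial, t_parallel) / (double)nprocs;
}

/* Print a one-line summary for a single timing measurement. */
void report_scaling(int nprocs, double t_serial, double t_parallel) {
    printf("P = %3d  time = %8.2f s  speedup = %5.2fx  efficiency = %5.1f %%\n",
           nprocs, t_parallel,
           speedup(t_serial, t_parallel),
           100.0 * efficiency(t_serial, t_parallel, nprocs));
}
```

For instance, a job that takes 100 seconds on one processor and 25 seconds on four has a speedup of 4 and an efficiency of 1.0; if it took 50 seconds on four processors instead, the efficiency would be 0.5.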

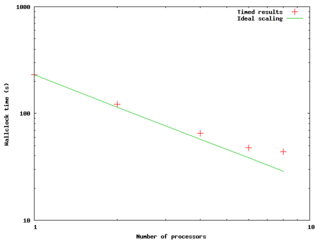

Strong Scaling Tests

The figure to the right and the data below show an example of a result of a small strong scaling test: running a fixed-size problem on a varying number of processors to see how the timing of the computation scales with the number of processors. The code was an OpenMP code run on a node of the GPC. The quantitative results follow below; the times were measured and then speedups and efficiencies were calculated as above.

| P | Time (min:sec) | Speedup | Efficiency |
|---|---|---|---|
| 1 | 3:50 | - | - |
| 2 | 2:02 | 1.87x | 94 % |
| 4 | 1:05 | 3.52x | 88 % |
| 6 | 0:47.8 | 4.81x | 80 % |
| 8 | 0:43.6 | 5.28x | 66 % |

The plot shows the compute time as a function of $P$; if the code maintained 100% parallel efficiency, we would expect the time to scale as $1/P$, so we plot it on a log-log scale. Also shown is the ideal scaling case: what the times would be if, using the $P=1$ timing as a normalization, we did get 100% efficiency. We can see that past 4 cores the measured case starts to significantly deviate from the ideal, and it looks like things would only get worse past 8 cores.

It's important to note here that scaling tests should be done on realistic problem sizes and for realistic lengths of time. Generally, for either serial or parallel programs there will be some overhead both at initialization time and during the course of the computation; if the problem size is too small, the overhead during the course of the run might be a significant fraction of the real work, and the program will behave needlessly poorly. Similarly, if the number of timesteps or iterations is too small, the initialization overhead will play a spuriously large role in the performance.

The above behaviour is typical for a small computation; it won't scale to too many cores, and the efficiency becomes monotonically worse as one increases the number of cores in use. The rate at which this happens will depend on the problem size and the type of computation. How is one to tell where to stop; how good an efficiency is good enough? Certainly there are rules of thumb --- one shudders to see efficiencies below 50% --- but one can arrive at more meaningful and quantitative results by considering throughput. Let's imagine we had 64 cores at our disposal, and we wanted to run 96 jobs as quickly as possible. Our total time to completion of the 96 jobs would vary with the number of cores we ran per job as follows:

| P (cores per job) | Time for one job | Time for all 96 jobs |
|---|---|---|
| 1 | 3:50 | 7:40 (2 batches, 64 jobs then 32) |
| 2 | 2:02 | 6:06 (3 batches, 32,32,32) |
| 4 | 1:05 | 6:30 (6 batches, 6x16) |
| 6 | 0:47.8 | 7:58 (10 batches, 9x10, 6) |
| 8 | 0:43.6 | 8:43 (12 batches, 12x8) |

If we use more than 4 processes per job in this case, it will actually take us longer to do all our runs than if we had simply run them serially! The shortest total time here is with 2 processes per job, with 4 close behind. For jobs that scale better with the number of processes (this could be a different program, or the same program with a different problem size), we will find this turnover point to be at higher $P$; for jobs that scale worse, lower $P$.
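The batch arithmetic behind a table like this is easy to automate. Here is a rough sketch, assuming every job takes the same time and batches run back to back with no queue wait; `concurrent_jobs`, `num_batches` and `total_time` are hypothetical helper names.

```c
/* Number of jobs that can run at once on total_cores cores when each
 * job uses cores_per_job cores; any leftover cores sit idle. */
int concurrent_jobs(int total_cores, int cores_per_job) {
    return total_cores / cores_per_job;
}

/* Number of batches needed to finish njobs jobs, rounding up.
 * Returns -1 if a single job does not fit on the machine. */
int num_batches(int total_cores, int cores_per_job, int njobs) {
    int per_batch = concurrent_jobs(total_cores, cores_per_job);
    if (per_batch < 1) return -1;
    return (njobs + per_batch - 1) / per_batch;
}

/* Total wallclock time in seconds to finish all jobs, given the
 * measured time of one job at this core count.
 * Returns -1.0 if a single job does not fit on the machine. */
double total_time(int total_cores, int cores_per_job, int njobs,
                  double job_seconds) {
    int batches = num_batches(total_cores, cores_per_job, njobs);
    if (batches < 0) return -1.0;
    return (double)batches * job_seconds;
}
```

With 64 cores, 96 jobs and 4 cores per job, `num_batches` gives 6, and at 65 seconds per job `total_time` gives 390 seconds, i.e. 6:30. Evaluating `total_time` for each measured core count and taking the minimum gives the most productive way to split up the machine.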

Weak Scaling Tests

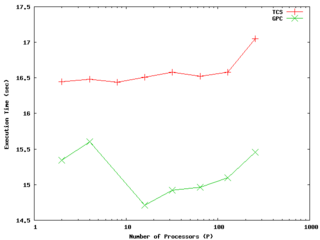

The strong scaling test described above considers the performance of a parallel code with a fixed work size as the number of processors varies; this tells us how the parallel overhead behaves as you go to more and more processors. A weak scaling test fixes the amount of work per processor and compares the execution time across different numbers of processors. Since each processor has the same amount to do, in the ideal case the execution time should remain constant. While the strong scaling test tells you how the parallel overhead scales with $P$, the weak scaling test tells you something weaker: whether the parallel overhead varies faster or slower than the amount of work.

Nonetheless, the weak scaling test can be the relevant one for determining how large a problem size one can efficiently compute with a given parallel code and system. An example of results for a weak scaling test on the GPC and TCS up to 256 processors (8 nodes of the TCS, 32 of the GPC) is shown to the right. In this case we are maintaining extremely good efficiency up to at least 128 processors with constant work per process on both architectures. It is possible to see different behaviour when first filling up a node (e.g., for fewer than 8 processes for the GPC, or 64 for TCS) than when one starts crossing nodes; one should understand this but it doesn't necessarily indicate problems.
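Since the ideal weak scaling result is a constant run time, a convenient figure of merit is the ratio of the baseline time to the time on more processors. The sketch below assumes the measurements are sorted by increasing processor count with the baseline first; `weak_efficiency` and `largest_efficient_p` are hypothetical names, and the cut-off threshold is something you would choose yourself.

```c
/* Weak-scaling efficiency: baseline time divided by the time on more
 * processors, with the work per processor held fixed.
 * 1.0 means the run time stayed constant (the ideal case). */
double weak_efficiency(double t_base, double t_p) {
    return t_base / t_p;
}

/* Scan n measurements, sorted by increasing processor count with the
 * baseline in element 0, and return the largest processor count
 * reached before the efficiency first drops below threshold.
 * Returns -1 if there are no measurements. */
int largest_efficient_p(const int *procs, const double *times, int n,
                        double threshold) {
    int best = -1;
    int i;
    for (i = 0; i < n; i++) {
        if (weak_efficiency(times[0], times[i]) < threshold) break;
        best = procs[i];
    }
    return best;
}
```

As a made-up illustration, run times of 10, 10, 12.5 and 20 seconds on 1, 8, 64 and 256 processors with a threshold of 0.75 would give 64 as the largest efficient processor count.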

Performance Tuning

You cannot improve what you cannot measure. Performance tuning is an iterative process between running an instrumented version of your code, getting data on performance throughout the code, and attempting to make changes to the code that will make it run more efficiently.

There are three main ways of instrumenting a code to find its performance. The first is manually adding timers around important parts of the code to find out how much time is spent in each part. This is worth thinking about doing when putting together a new code, as it means that you'll have a very robust way of finding out how well the different parts of the code perform on different platforms and with different compiler options, etc. The results are, however, necessarily very coarse-grained; they are very useful for comparing performance under different situations, but give very little information about whether or not there are performance problems or what they might be.

The second technique is sampling, sometimes called `program counter sampling' or `statistical sampling'. In this case, the program is run in an environment where it is interrupted briefly at some set frequency (typically something like 100 times per second) and the location of the program counter is jotted down before the program is resumed. At the end of the program, these locations are translated into locations in the source code, and one has a statistical profile of where the program has spent its time.

Statistical sampling has several advantages. It has a very low overhead --- the sampling procedure for instance takes much less time than a function call to a timer routine --- so that the program runs much as it would without the measurement process. If the samples are taken often enough, the result is a very accurate picture of where your program is spending its time, allowing you to very quickly identify `hotspots' in the code and focus your attention on the most costly areas of the program. This combination of relevant information and low-overhead makes statistical sampling the first resort for serious performance measurement.

Sampling, however, has drawbacks. While it lets you know where the program is spending its time, it doesn't tell you why, or how it got there in the first place. For instance, in a parallel program you may be spending too much time in barriers of one sort or another (perhaps at MPI_WAITALL calls in MPI, or implicit barriers at the end of parallel sections in OpenMP) but unless you know where in the code that routine was called from, you can't address the problem. In this case you need some sort of trace through the program which keeps track of which routine called what. This is generally a much heavier-weight process, which can substantially increase the runtime of the code, running the risk of `the Heisenberg effect': measurement changing the system under observation. On the other hand, sometimes you just need that level of information, so tracing packages or libraries must be used.

A related method is the use of hardware counters: counters within the CPU itself which keep track of performance-related information, such as the number of cache misses or branch mis-predictions within your code. Collecting this information, either regularly throughout the code or once for the entire code run, can give very specific information about performance problems. Right now these counters are available on the TCS system but not on the GPC system, as the mainstream Linux kernel does not provide access to these counters.

Simple Timer Wrappers: C, FORTRAN

Below are some simple examples of timers in C and FORTRAN which can be called before and after blocks of code to give coarse-grained wallclock timings (in seconds) for sections of your code. Other approaches are possible.

Simple timers in C: <source lang=c>
#include <stddef.h>
#include <sys/time.h>

/* record the current time in t */
void tick(struct timeval *t) {
    gettimeofday(t, NULL);
}

/* returns the time in seconds elapsed between the time stored in t and now */
double tock(struct timeval *t) {
    struct timeval now;
    gettimeofday(&now, NULL);
    return (double)(now.tv_sec - t->tv_sec) +
           ((double)(now.tv_usec - t->tv_usec)/1000000.);
}
</source>

and how to use them: <source lang=c>
#include <stdio.h>
#include <sys/time.h>

struct timeval init, calc, io;
double inittime, calctime, iotime;

/*... */

tick(&init);
/* do initialization */
inittime = tock(&init);

tick(&calc);
/* do big computation */
calctime = tock(&calc);

tick(&io);
/* do IO */
iotime = tock(&io);

printf("Timing summary:\n\tInit: %8.5f sec\n\tCalc: %8.5f sec\n\tI/O : %8.5f sec\n",
       inittime, calctime, iotime);
</source>
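One of the other possible approaches is worth sketching. `gettimeofday` reports the time of day, which can jump if the system clock is adjusted during a run; POSIX also provides `clock_gettime` with `CLOCK_MONOTONIC`, which is not affected by such adjustments. The following is a minimal sketch under the assumption that your system supports that clock; the names `tick_mono` and `tock_mono` are invented here to mirror the timers above.

```c
#define _POSIX_C_SOURCE 199309L
#include <time.h>

/* Record the current reading of the monotonic clock in *t. */
void tick_mono(struct timespec *t) {
    clock_gettime(CLOCK_MONOTONIC, t);
}

/* Return the time in seconds elapsed since the reading stored in *t. */
double tock_mono(const struct timespec *t) {
    struct timespec now;
    clock_gettime(CLOCK_MONOTONIC, &now);
    return (double)(now.tv_sec - t->tv_sec)
         + (double)(now.tv_nsec - t->tv_nsec) / 1.0e9;
}
```

These are used the same way as `tick` and `tock`, with a `struct timespec` in place of the `struct timeval`. On some older Linux systems you may need to link with -lrt for `clock_gettime` to be found.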

Simple timers in FORTRAN:

<source lang=fortran>
subroutine tick(t)
    integer, intent(OUT) :: t

    call system_clock(t)
end subroutine tick

! returns time in seconds from the time described by t to now
real function tock(t)
    integer, intent(in) :: t
    integer :: now, clock_rate

    call system_clock(now,clock_rate)

    tock = real(now - t)/real(clock_rate)
end function tock
</source>

And using them: <source lang=fortran>
call tick(calc)
! do big calculation
calctime = tock(calc)

print *,'Timing summary'
print *,'Calc: ', calctime
</source>

Command-line Performance Tools

Many of the tools below can be used to examine both serial and parallel performance problems with a code. We'd like to encourage you to tune serial performance first. Worrying about parallel performance before the code performs well with a single task doesn't make much sense! Profiling your code when running with one task allows you to spot serial `hot spots' for optimization, as well as giving you a more detailed understanding of where your program spends its time. Further, any performance improvement you make in the serial code will automatically speed up your parallel code.

We've already talked about coarse-grained measurements such as timers within the code and using tools such as /bin/time. These are very useful for comparing overall performance between different platforms/parameters, but we won't need to discuss them further here.

gprof (profiling: everywhere)

A statistical sampling workhorse is gprof, the GNU version of an old common Unix utility called prof. To use this, the code must be re-compiled with both source-code symbols intact (-g) and with profiling information available (for most compilers, this is -pg; for the IBM compilers (xlf, xlc, xlC) it is -p). It is worth knowing because of its ubiquity, and because it contains much of the functionality of newer tools, so the same concepts recur in other tools.

So let's consider the following trivial program pi.c: <source lang="c" line enclose="div">
#include <stdio.h>
#include <stdlib.h>
#include <time.h>

double calc_pi(long n) {
    long in = 0;
    long out = 0;
    long i;
    double x,y;

    for (i=0; i<n; i++) {
        x = drand48();
        y = drand48();
        if (x*x+y*y < 1) {
            in++;
        } else {
            out++;
        }
    }

    return 4.*(double)in/(double)(in+out);
}

int main(int argc, char **argv) {
    long n, defaultn=100000;
    double pi;
    time_t t;

    /* seed random number generator */
    srand48(time(&t));

    /* get number of tries */
    if (argc < 2 || (n=atoi(argv[1]))<1) {
        n = defaultn;
        printf("Using default n = %ld\n", n);
    }

    pi = calc_pi(n);
    printf("Pi = %lf\n", pi);

    return 0;
}
</source>

We can compile this with profiling on and run it:

    $ gcc -g -pg -o pi pi.c
    $ ./pi 100000000
    Pi = 3.141804

(Note that this isn't a very good way of calculating pi!). On exit, this program creates a file called gmon.out; this contains the profiling information about the run of the code. We can take a look at this by using gprof:

    $ gprof pi gmon.out
    Flat profile:
    Each sample counts as 0.01 seconds.
      %   cumulative   self              self     total
     time   seconds   seconds    calls  ms/call  ms/call  name
    100.88      1.00     1.00        1   998.76   998.76  calc_pi
    index % time    self  children    called     name
                    1.00    0.00       1/1           main [2]
    [1]    100.0    1.00    0.00       1         calc_pi [1]
    -----------------------------------------------
                                                     <spontaneous>
    [2]    100.0    0.00    1.00                 main [2]
                    1.00    0.00       1/1           calc_pi [1]
    -----------------------------------------------

The first part tells us that essentially all of the time spent running was in the calc_pi() routine (of course), and the second part attempts to be a call graph, showing that main called calc_pi() once. An important concept in the timing is the `self' and `children' times for each routine. Because most routines call many other routines, it's often useful to distinguish between the total amount of time spent between starting and ending the routine (the `inclusive' time, which is `self' plus `children') and that same time excluding the time spent in child routines (the `exclusive' time, which is `self' alone).

The above results are fairly trivial and not very useful for this simple program, but in more complicated routines it can be very valuable to narrow down hotspots to particular regions of code.

In fact, gprof also allows you to view the time spent in the code by lines of code. As you chop the program up finer, the statistical sampling gets less accurate; thus to look at the results by line of code you must be sure that your sample run was long enough to get meaningful data. But the results can be extremely useful:

    $ gprof --line pi gmon.out
    Flat profile:
    Each sample counts as 0.01 seconds.
      %   cumulative   self              self     total
     time   seconds   seconds    calls  Ts/call  Ts/call  name
     70.31      0.70     0.70                             calc_pi (pi.c:14 @ 40078b)
     14.27      0.84     0.14                             calc_pi (pi.c:17 @ 4007bc)
      5.10      0.89     0.05                             calc_pi (pi.c:11 @ 4007c1)
      4.08      0.93     0.04                             calc_pi (pi.c:15 @ 4007b5)
      3.06      0.96     0.03                             calc_pi (pi.c:13 @ 400781)
      2.55      0.98     0.03                             calc_pi (pi.c:12 @ 400777)
      1.53      1.00     0.02                             calc_pi (pi.c:11 @ 40076d)
      0.00      1.00     0.00        1     0.00     0.00  calc_pi (pi.c:5 @ 40074c)

where now we can see that the single line containing the radius calculation (if (x*x+y*y < 1)) is 70% of the work for the entire program. This tells you where you should spend your time to optimize the code. Other tools exist for this sort of line-by-line analysis; gcov in the gcc compiler suite counts the number of times a given source line is executed - the idea was for coverage analysis for test suites, but it certainly can be used for profiling as well; however, usually the amount of time spent at a line is more important than the number of executions.

For parallel programs, gprof will generally output a separate gmon.out file for each process; for threaded applications, output for all threads will be summed into the same gmon.out. It may be useful to sum up all the results and view them with gprof or to look at them individually.

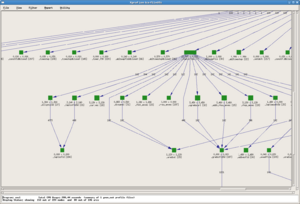

There are other tools for looking at the same data. For instance, on the TCS system, the command Xprof (run the same way as gprof; Xprof program_name gmon.out) lets you look at the call tree as a graphical tree. Each routine is shown by a block with a size proportional to the time spent in each routine; the width is the inclusive time, and the height is the exclusive time.

hpmcount (performance counters: TCS)

On the TCS, hpmcount allows the querying of the performance counter values over the course of a run. Since here we are simply asking the CPU to report values it obtains during the run of a program, the code does not need to be instrumented; simply typing

hpmcount hpmcount_args program_name program_args

will run the program and output the results from the hardware performance counters at the end. So for instance, with our trivial pi program above,

    tcs-f11n05-$ hpmcount ./pi
    Using default n = 100000
    Pi = 3.144240
     Execution time (wall clock time): 0.020325 seconds
     ######## Resource Usage Statistics ########
     Total amount of time in user mode : 0.012754 seconds
     Total amount of time in system mode : 0.001486 seconds
     Maximum resident set size : 440 Kbytes
     Average shared memory use in text segment : 0 Kbytes*sec
     Average unshared memory use in data segment : 0 Kbytes*sec
     Number of page faults without I/O activity : 53
     Number of page faults with I/O activity : 1
     Number of times process was swapped out : 0
     Number of times file system performed INPUT : 0
     Number of times file system performed OUTPUT : 0
     Number of IPC messages sent : 0
     Number of IPC messages received : 0
     Number of signals delivered : 0
     Number of voluntary context switches : 6
     Number of involuntary context switches : 0
     ####### End of Resource Statistics ########
     Set: 1
     Counting duration: 0.014947083 seconds
     PM_FPU_1FLOP (FPU executed one flop instruction ) : 400093
     PM_FPU_FMA (FPU executed multiply-add instruction) : 500030
     PM_FPU_FSQRT_FDIV (FPU executed FSQRT or FDIV instruction) : 1
     PM_CYC (Processor cycles) : 58485795
     PM_RUN_INST_CMPL (Run instructions completed) : 24238152
     PM_RUN_CYC (Run cycles) : 70307511
     Utilization rate : 61.172 %
     Flop : 1.400 Mflop
     Flop rate (flops / WCT) : 68.888 Mflop/s
     Flops / user time : 112.614 Mflop/s
     FMA percentage : 111.103 %

There are a variety of sets of performance counters that can be reported; the default set isn't especially helpful for HPC-type computations; sets of performance counters can be specified on the commandline in the format -d -s item,item,item. Sets 5 and 12 are very useful for showing memory performance (showing L1 and L2 cache misses) and set 6 is especially useful for shared memory profiling, giving statistics about how often off-processor memory had to be accessed.

Showing the counters for the entire program will often tell you if there's a problem or not, but won't tell you where it is. For more detailed information, one can use the hpm library to manually instrument different regions of your code, and get similar outputs to above for several different, smaller, regions of code.

On the Linux side, oprofile allows the reporting of similar information, but to use it one must have root access to the Linux machine.

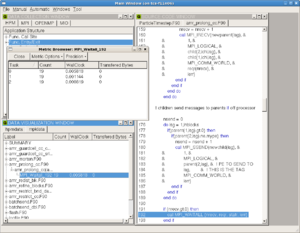

cachegrind (Memory use analysis: GPC)

valgrind is a memory tool that is usually thought of in terms of finding memory-access bugs in large programs. Rather than instrumenting a code or measuring counters, valgrind takes a fairly extreme approach -- it emulates your program running on a computer, essentially running a simulation of your program running on the same kind of computer valgrind is running on. This has enormous overhead (runtimes can be up to 20x as long as normal) but the result is exquisitely detailed information about what your program is doing.

Memory access is often a bottleneck for HPC codes, and cachegrind is a tool for valgrind which simulates the use of cache in your program, giving you line-by-line information on which parts of the code have cache performance issues. Your code does not need to be recompiled, although compiling with -g is necessary for the output to be useful. Cachegrind is run as shown:

valgrind --tool=cachegrind myprogram myprogram_arguments

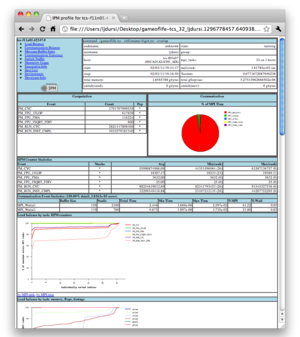

Overall results for the whole program are given at the end of the program's normal output, and more detailed information is saved in a file whose name begins with cachegrind.out. These output files are plain-text files, readable in principle by humans, but it is much easier to see what is going on with visual tools like kcachegrind (shown to the right) or, eventually, valkyrie (which can also be used for memcheck output).

IPM (MPI Tracing and hardware counters: GPC, TCS)

IPM, the [Integrated Performance Monitors](http://ipm-hpc.sourceforge.net/), monitors a variety of MPI and hardware performance information. There are a number of IPM modules on the TCS and GPC, depending on which machine and compilers/MPI you use.

These can be linked in to your MPI executable at link- or run-time, and generate detailed output at the end of your run which can be parsed to produce a nice set of HTML + graphics.

Running IPM varies slightly between the GPC and the TCS. On the GPC, because the default for the MPI libraries is to be compiled in as dynamic, shared libraries, it is easiest just to compile your program as normal and link in these libraries only when you are about to run your program. In your submission script, run your program like so:

module load ipm/[appropriate-version]

export LD_PRELOAD=${SCINET_IPM_LIB}/libipm.so

mpirun [...]

export LD_PRELOAD=

On the other hand, on TCS, it is easiest to link in the IPM libraries at link time, with

...-L${SCINET_IPM_LIB} -lipm

Either way, once the program is finished, the directory it was run in will contain a large XML file with an ugly name like

[username].[longnumber]

To turn this into useful data, do:

    $ module load ipm/[appropriate-version]
    $ ipm_parse -html [username].[longnumber]

That will produce a directory named something like

[executable].[username].[number].[node]

which you can copy back to your computer and view the index.html file within for lots of detailed info about your run.

Note that on the GPC, there are IPM modules for "posix" and "mpiio". Which of these you use only matters if you want to try to do I/O profiling as well as MPI profiling. If you do want to do IO tracing, you will have to be sure to choose the right module variant (mpiio if you do parallel I/O, either manually with MPI-I/O or through a parallel HDF5 or NetCDF library, and posix otherwise) and statically link the library into your executable at compile time:

...-L${SCINET_IPM_LIB} -lipm

Graphical Performance Tools

While graphical performance tools typically measure similar things to their command-line relations, a graphical display opens up the possibility of aggregating much more information and having increased flexibility in displaying it in a variety of ways; this can be very helpful, especially in the initial stages of finding performance problems.

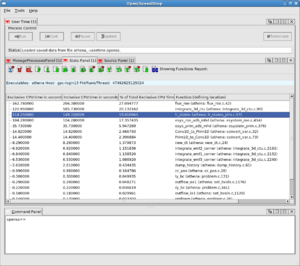

OpenSpeedShop (profiling, MPI tracing: GPC)

OpenSpeedShop is a tool that is installed on GPC; it is currently compiled for support only with gcc and openmpi. To use it,

module load gcc python intel openmpi openspeedshop

It provides the functionality of gprof, with the addition of hardware counter measurements (not currently supported on GPC machines) and options to do both lightweight and more detailed, more heavy-weight profiling. OpenSpeedShop also contains enhanced support for dealing with parallel runs, and for tracing of MPI or I/O calls to find performance problems in those areas. The parallel support is considerably more than what gprof offers; bundling the data from thousands of tasks into one set of results is a significant algorithmic challenge in itself.

Another important addition, shared by many of the other graphical tools, is the idea of bundling results into different `experiments' --- bundles of an executable, measurement type, and resulting data --- which makes the iterative process of performance tuning much easier. OpenSpeedShop, as with some other tools, has the ability to directly compare the results of different experiments, so one can more easily see if a particular change made things better or worse, and if so where.

OpenSpeedShop does not require re-compilation of the executable (although, as with all these tools, for the correlation with the source code to be useful the code should be compiled with debugging symbols, the option for which is almost universally -g to the compiler and linker). The code is then either instrumented, or run in an instrumented environment. Shown to the right are two of the views available for examining the timing results of one OpenMP code.

OpenSpeedShop can be launched from the commandline and then used entirely through the gui: there are a variety of `wizards' which guide you through choosing how to instrument and run your experiment:

$ openss

This should be done from a directory containing the source code and the executable. This is an excellent way to get started with this tool. Once one is more familiar with the tool, one can run a variety of experiments on the command line:

    openss -f program_name pcsamp

where the above runs the pcsamp (program counter sampling, as in gprof) measurement on the executable program_name. Then one can launch the gui to view the results. There are options for instrumenting the executable in a variety of ways, and taking different measurements; the OpenSpeedShop web page contains links to documentation and tutorials.

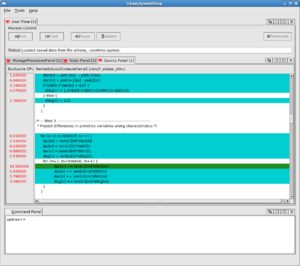

PeekPerf (profiling, TCS)

Peekperf is IBM's single graphical `dashboard' providing access to many performance measurement tools for examining hardware counter data, threads, message passing, IO, and memory access, several of which are available separately as command-line tools. Like OpenSpeedShop, it does not require re-compilation of the executable; an instrumented version of the code is generated at run time and this instrumented version is executed with whatever options you care to pass to it. It does not have the same support for comparing experiments as OpenSpeedShop does; however, it allows running several different types of measurements at once and seeing how they correlate in a given run, which is something OpenSpeedShop doesn't have.

Start peekperf at the command line

    $ peekperf

and tell peekperf which executable you wish to run measurements on. You then highlight which sorts of measurements you wish to make (which sorts are available depends on the type of program: threaded, OpenMP, etc.), select `generate an instrumented executable', and then `run the instrumented executable', giving it the name of either the instrumented executable or a script to run the same; peekperf will then begin displaying the resulting data as soon as the program has completed.

Understanding the interface and resulting data takes some practice, and the documentation is quite sparse; however the flexibility in the range of measurements to take makes this an excellent source of performance information for programs running on the TCS system.

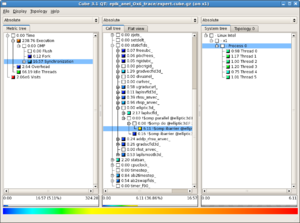

Scalasca (profiling, tracing: TCS, GPC)

Scalasca is a sophisticated tool which takes the aggregation of data shown in the above graphical tools one step further and analyzes the results to pinpoint and display common performance problems; it scales extremely well and the graphical display makes it very easy for the user to find out where the performance issues are. To use scalasca, on either the TCS or the GPC load the scalasca module:

$ module load scalasca

Scalasca requires the code to be recompiled, and it has wrapper scripts to select and choose the right options to use. If your code for instance is normally compiled with

    ifort -c myprog.f
    ifort -o myprog myprog.o -lm

then one can instead use

    scalasca -instrument ifort -c myprog.f
    scalasca -instrument ifort -o myprog myprog.o -lm

Note that with the Intel compilers, if you're using OpenMP, you'll have to add the -pomp flag, e.g. scalasca -instrument -pomp.

Scalasca then parses the rest of the command line and adds the necessary flags. (If you are curious, scalasca -instrument -v will show you what the resulting command line actually is.) There is also a shortcut, skin, which is equivalent to scalasca -instrument.

When the new executable is generated, it's run in a similar way; if you normally run your program as

./myprog

or

mpirun -np 5 ./myprog

You'd instead do

    scalasca -analyze ./myprog
    scalasca -analyze mpirun -np 5 ./myprog

The program will run as usual with only additional lines about output to files. (Again, there is a shortcut available; scan is equivalent to scalasca -analyze.) To then look at the results with a graphical user interface, one uses

scalasca -examine [epik directory name]

where the directory name is that created by the analyze program. This tries to pop up an X window; from an xterm on a Linux or Mac machine, you will have to login via

    $ ssh -Y login.scinet.utoronto.ca
    scinet01$ ssh -Y [whichever devel node you're using]

and you will have to run this from one of the devel nodes, rather than one of the compute nodes.

A screenshot of the results is shown to the right for an OpenMP program, where the wait time at implicit barriers at the end of parallel sections is selected as an important metric to show on the left; the middle panel shows the call tree indicating the context in which the delays occurred, and the panel on the right gives the breakdown for each thread.