User Codes

Astrophysics

Athena (explicit, uniform grid MHD code)

Athena is a plain C code with few library dependencies, so it is straightforward to build on new machines.

It encapsulates its compiler flags, etc. in a Makeoptions.in file, which is then processed by configure. I've used the following additions to Makeoptions.in on TCS and GPC:

<source lang="sh"> ifeq ($(MACHINE),scinettcs)

CC = mpcc_r LDR = mpcc_r OPT = -O5 -q64 -qarch=pwr6 -qtune=pwr6 -qcache=auto -qlargepage -qstrict MPIINC = MPILIB = CFLAGS = $(OPT) LIB = -ldl -lm

else ifeq ($(MACHINE),scinetgpc)

CC = mpicc LDR = mpicc OPT = -O3 MPIINC = MPILIB = CFLAGS = $(OPT) LIB = -lm

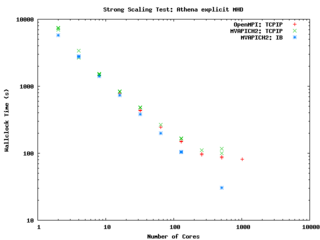

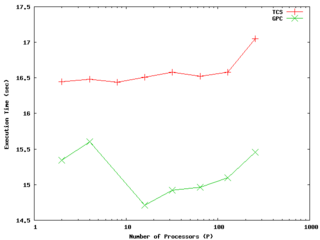

else ... endif endif </source> It performs quite well on the GPC, scaling extremely well even on a strong scaling test out to about 256 cores (32 nodes) on Gigabit ethernet, and performing beautifully on InfiniBand out to 512 cores (64 nodes).
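As a rough sketch of how the MACHINE tags above are meant to be used, the helper below prints the build steps for one of the two SciNet entries. The function name `athena_build_steps` is hypothetical, and the `--enable-mpi` configure flag and `make all MACHINE=...` invocation are assumptions about a typical Athena checkout; check `./configure --help` in your own copy before relying on them.

```sh
#!/bin/sh
# Sketch only: print (not run) the build steps for a MACHINE tag from Makeoptions.in.
# athena_build_steps is a hypothetical helper, not part of Athena.
athena_build_steps() {
    machine="$1"
    case "$machine" in
        scinettcs|scinetgpc) ;;   # the two tags added to Makeoptions.in
        *) echo "unknown MACHINE: $machine" >&2; return 1 ;;
    esac
    # Assumed configure flag and make target; verify against your Athena version.
    echo "./configure --enable-mpi"
    echo "make all MACHINE=$machine"
}

athena_build_steps scinetgpc
```

The commands are printed rather than executed so they can be checked first and then pasted into a shell in the top-level Athena directory.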

-- ljdursi 19:20, 13 August 2009 (UTC)

FLASH3 (Adaptive Mesh reactive hydrodynamics; explicit hydro/MHD)

FLASH encapsulates its machine-dependent information in the FLASH3/sites directory. For the GPC, you'll have to

 module load intel
 module load openmpi
 module load hdf5/183-v16-openmpi

and with that, the following file (sites/scinetgpc/Makefile.h) works for me:

<source lang="sh">
# Must do module load hdf5/183-v16-openmpi
HDF5_PATH = ${SCINET_HDF5_BASE}
ZLIB_PATH = /usr/local

#----------------------------------------------------------------------------
# Compiler and linker commands
#
# We use the f90 compiler as the linker, so some C libraries may explicitly
# need to be added into the link line.
#----------------------------------------------------------------------------

# modules will put the right mpi in our path
FCOMP   = mpif77
CCOMP   = mpicc
CPPCOMP = mpiCC
LINK    = mpif77

#----------------------------------------------------------------------------
# Compilation flags
#
# Three sets of compilation/linking flags are defined: one for optimized
# code, one for testing, and one for debugging. The default is to use the
# _OPT version. Specifying -debug to setup will pick the _DEBUG version,
# these should enable bounds checking. Specifying -test is used for
# flash_test, and is set for quick code generation, and (sometimes)
# profiling. The Makefile generated by setup will assign the generic token
# (ex. FFLAGS) to the proper set of flags (ex. FFLAGS_OPT).
#----------------------------------------------------------------------------

FFLAGS_OPT   = -c -r8 -i4 -O3 -xSSE4.2
FFLAGS_DEBUG = -c -g -r8 -i4 -O0
FFLAGS_TEST  = -c -r8 -i4

# if we are using HDF5, we need to specify the path to the include files
CFLAGS_HDF5 = -I${HDF5_PATH}/include

CFLAGS_OPT   = -c -O3 -xSSE4.2
CFLAGS_TEST  = -c -O2
CFLAGS_DEBUG = -c -g

MDEFS =

.SUFFIXES: .o .c .f .F .h .fh .F90 .f90

#----------------------------------------------------------------------------
# Linker flags
#
# There is a separate version of the linker flags for each of the _OPT,
# _DEBUG, and _TEST cases.
#----------------------------------------------------------------------------

LFLAGS_OPT   = -o
LFLAGS_TEST  = -o
LFLAGS_DEBUG = -g -o

MACHOBJ =

MV = mv -f
AR = ar -r
RM = rm -f
CD = cd
RL = ranlib
ECHO = echo
</source>
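The comments in Makefile.h describe three flag sets (_OPT, _DEBUG, _TEST) selected by options to setup. A minimal sketch of the matching setup invocations is given below. The helper name `flash_setup_cmd` and the `Sedov` problem are illustrative only, and the `-auto` and `-site=` options are assumed to behave as in a stock FLASH3 setup script; confirm against the setup help in your own copy.

```sh
#!/bin/sh
# Sketch only: print (not run) the FLASH3 setup command for the scinetgpc site.
# flash_setup_cmd is a hypothetical helper; "Sedov" is just an example problem.
flash_setup_cmd() {
    problem="$1"
    mode="${2:-opt}"          # opt (default), debug, or test
    case "$mode" in
        opt)   extra="" ;;         # uses the _OPT flags
        debug) extra=" -debug" ;;  # uses the _DEBUG flags
        test)  extra=" -test" ;;   # uses the _TEST flags
        *) echo "unknown mode: $mode" >&2; return 1 ;;
    esac
    # -auto and -site= are assumed setup options; verify in your FLASH3 version.
    echo "./setup $problem -auto -site=scinetgpc$extra"
}

flash_setup_cmd Sedov
flash_setup_cmd Sedov debug
```

Run the printed command from the FLASH3 top-level directory after loading the modules listed earlier, then build in the directory that setup generates.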

-- ljdursi 22:11, 13 August 2009 (UTC)

Aeronautics

Chemistry

Climate Modelling

Medicine/Bio

High Energy Physics

Structural Biology

Molecular simulation of proteins, lipids, carbohydrates, and other biologically relevant molecules.

Molecular Dynamics (MD) simulation

DESMOND

GROMACS

Download and general information: http://www.gromacs.org

Search the mailing list archives: http://oldwww.gromacs.org/swish-e/search/search2.php

-- cneale 18 August 2009